Augmented Reality

Abstract Information

Augmented Reality (AR) is a live, direct or indirect view of a physical, real-world environment whose elements are augmented by computer-generated sensory input such as sound, graphics, or GPS data. AR is related to a more general concept called Mediated Reality, in which a computer modifies a view of reality (possibly even diminishing rather than augmenting it). The technology therefore works by enhancing one's current perception of reality. Virtual Reality (VR), by contrast, replaces the real world with a simulated one. In AR, augmentation is conventionally performed in real time and in semantic context with environmental elements, for example sports scores shown on TV during a match. With the help of advanced AR technology (adding computer vision and object recognition), information about the user's surrounding real world becomes interactive and digitally manipulable.

In short, AR is a digital overlay on top of the real world, consisting of computer graphics, text, video, and audio, which is interactive in real time. It is experienced through a smartphone, tablet, computer, or AR eyewear equipped with software and a camera. Examples of AR today include translating signs or menus into the language of your choice, pointing at and identifying stars and planets in the night sky, and delving deeper into a museum exhibit with an interactive AR guide.

AR presents the opportunity to understand and experience our world in new ways. Unlike VR, it does not require us to leave the physical world behind: in AR we still see the real world around us, whereas in VR the real world is completely blocked out and replaced by a computer-generated environment that immerses the user.

How it all Started

A widely cited definition of AR comes from Ronald Azuma, who in 1997 identified three characteristics that define augmented reality:

- It combines the real and virtual worlds

- It is interactive in real time

- It is registered in 3D (virtual objects are aligned with the real world)

Augmented reality should not be confused with virtual reality, because the two technologies are not the same. The crucial idea of AR is to overlay digitized information on the real world rather than replace it.

Technology

The hardware components of AR are a processor, a display, sensors, and input devices. Modern mobile computing devices such as smartphones and tablet computers contain all of these. They typically include a camera, a GPS receiver, and MEMS (Micro-Electro-Mechanical Systems) sensors such as accelerometers and solid-state compasses, which makes them suitable platforms for AR.

Display Devices

Display technologies used for AR rendering include optical projection systems, monitors, handheld devices, and display systems worn on the human body.

Head-Mounted Devices/Displays (HMD)

An HMD is a display device paired with a headset such as a helmet. HMDs place images of both physical and virtual objects over the user's field of view. Modern HMDs often use sensors for six-degrees-of-freedom tracking. This allows the system to align virtual information with the physical world and adjust it as the user's head moves (head tracking). HMDs can provide users with immersive, mobile, and collaborative AR experiences.

Handheld Devices

Graphics, Audio-visual and Interaction (GAI) based mobile Augmented Reality is a Human-Computer Interaction technology in which users can view multimedia content (such as video, 2D, 3D, text, and animation) with audio-visuals in an augmented environment. A GAI-based mobile AR system allows users to develop their own Augmented Reality applications and games. The system is based on Symbian and Android smartphones: users capture real-time video with the smartphone's camera, and virtual objects are rendered into the augmented environment. Users can interact with and control the virtual objects by touch on touch-enabled phones, or with buttons on non-touch phones. The general purpose of this technology is to introduce multimedia-based mobile Augmented Reality to users.

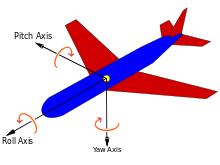

Degrees of Freedom

Degrees of freedom refer to the ways a rigid body can move in 3D space. Specifically, the body is free to change position by translation along three perpendicular axes:

- forward/backward (surge)
- up/down (heave)
- left/right (sway)

It can also change orientation by rotation about three perpendicular axes:

- swiveling left/right (yaw)
- tilting forward/backward (pitch)
- pivoting side to side (roll)

Together, these are called the six degrees of freedom of a rigid body.
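As a minimal sketch (not from the original text), the six degrees of freedom can be held in a small value class with three translations and three rotations. The class name `Pose6DoF` and its methods are hypothetical, and angles are assumed to be in degrees. Only yaw is wrapped here, because composing arbitrary 3D rotations correctly requires rotation matrices or quaternions.

```java
/**
 * Hypothetical value class for a rigid-body pose with six degrees of freedom.
 * Translations use arbitrary length units; rotations are in degrees.
 */
public final class Pose6DoF {
    // Translation along three perpendicular axes
    public final double surge; // forward/backward
    public final double heave; // up/down
    public final double sway;  // left/right
    // Rotation about three perpendicular axes
    public final double yaw;   // swivel left/right
    public final double pitch; // tilt forward/backward
    public final double roll;  // pivot side to side

    public Pose6DoF(double surge, double heave, double sway,
                    double yaw, double pitch, double roll) {
        this.surge = surge;
        this.heave = heave;
        this.sway = sway;
        this.yaw = yaw;
        this.pitch = pitch;
        this.roll = roll;
    }

    /** Returns a new pose moved along the three translational axes; orientation is unchanged. */
    public Pose6DoF translate(double dSurge, double dHeave, double dSway) {
        return new Pose6DoF(surge + dSurge, heave + dHeave, sway + dSway, yaw, pitch, roll);
    }

    /** Returns a new pose swiveled by dYaw degrees, keeping yaw within [0, 360). */
    public Pose6DoF turn(double dYaw) {
        double wrapped = ((yaw + dYaw) % 360.0 + 360.0) % 360.0;
        return new Pose6DoF(surge, heave, sway, wrapped, pitch, roll);
    }
}
```

For example, a pose with yaw 350 turned by +20 degrees ends up at yaw 10, not 370.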

To formulate the degrees of freedom of a mechanism, we count the parameters that define the configuration of a set of rigid bodies constrained by the joints connecting them. A system of n rigid bodies moving freely in space has 6n degrees of freedom, measured relative to a fixed frame. When counting the degrees of freedom of this system, the ground (fixed) frame is included in the number of bodies, so that mobility does not depend on which body is chosen as the fixed frame. The unconstrained system of N = n + 1 bodies then has mobility (Mobility Formula) M = 6n = 6(N-1), because the fixed body has zero degrees of freedom relative to itself.
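The unconstrained count M = 6(N - 1) is the starting point of the Grübler-Kutzbach criterion, a standard mobility formula that the text above does not spell out. Each joint i removes 6 - f_i freedoms, where f_i is the number of freedoms the joint permits, so for j joints M = 6(N - 1 - j) + Σ f_i.

The sketch below assumes spatial (6-DoF) bodies and ignores redundant constraints, which the criterion cannot detect. The names `Mobility` and `mobility` are hypothetical.

```java
/** Hypothetical helper computing spatial mobility with the Grübler-Kutzbach criterion. */
public final class Mobility {

    /**
     * M = 6(N - 1 - j) + sum of f_i, where N counts all bodies including the fixed
     * ground body, j is the number of joints and f_i is the freedom of joint i.
     * With no joints this reduces to the unconstrained case M = 6(N - 1).
     */
    public static int mobility(int bodiesIncludingGround, int... jointFreedoms) {
        int sumOfFreedoms = 0;
        for (int f : jointFreedoms) {
            sumOfFreedoms += f;
        }
        return 6 * (bodiesIncludingGround - 1 - jointFreedoms.length) + sumOfFreedoms;
    }

    public static void main(String[] args) {
        // Unconstrained: n = 3 moving bodies plus ground, so N = 4 and M = 6 * 3
        System.out.println(mobility(4));                   // 18
        // Serial arm with six revolute joints (f = 1 each): N = 7 (ground + 6 links)
        System.out.println(mobility(7, 1, 1, 1, 1, 1, 1)); // 6
    }
}
```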

Geodesy theory

(The geodesy theory here is based on the book: A. Jagielski, Geodezja I, GEODPIS, 2005.) The most important question is how to detect points of interest and present them on the screen. Image recognition algorithms might be what comes to mind, but they are computationally demanding and hard to implement well on a mobile device. So we look to other sciences for an answer, and it turns out that geodesy offers a simple solution. Wikipedia explains the azimuth as: “The azimuth is the angle formed between a reference direction (North) and a line from the observer to a point of interest projected on the same plane as the reference direction orthogonal to the zenith.”

So what we need to do is recognize the destination point by comparing two azimuths: the one calculated from the basic properties of a right-angle triangle, and the actual azimuth the device is pointing to. Here is what we need to achieve this:

- get the GPS location of the device

- get the GPS location of the destination point

- calculate the theoretical azimuth based on the GPS data

- get the real azimuth of the device

- compare both azimuths within a given accuracy and trigger an event

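Now the question is how to calculate the azimuth. It turns out to be quite simple if we ignore the Earth's curvature and treat its surface as flat.

The device (point A) and the destination (point B) then form a right-angle triangle. Its legs are the latitude difference Δlat (north-south) and the longitude difference Δlon (east-west). We can calculate the angle φ between points A and B from the simple equation:

$$\varphi = \arctan\frac{|\Delta\mathrm{lon}|}{|\Delta\mathrm{lat}|}$$

The signs of Δlat and Δlon determine the quadrant, and therefore how φ converts into an azimuth between 0° and 360°.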
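The right-angle-triangle calculation can be sketched in Java as follows. This is a sketch under the same flat-Earth assumption as the text, not the original article's implementation, and the class and method names are hypothetical.

Instead of computing the arctangent of |Δlon| / |Δlat| and then picking the quadrant by hand, it uses `Math.atan2(dLon, dLat)`. That call returns the angle measured from north with the quadrant already resolved. One common refinement, not applied here, is to multiply Δlon by cos(latitude), since a degree of longitude shrinks away from the equator.

```java
/** Hypothetical helper: flat-Earth azimuth between two GPS positions. */
public final class FlatAzimuth {

    /**
     * Azimuth from point A (device) to point B (destination), in degrees clockwise
     * from north, in the range [0, 360). Latitude and longitude differences are
     * treated as the legs of a right-angle triangle; Earth's curvature is ignored.
     */
    public static double theoreticalAzimuth(double latA, double lonA,
                                            double latB, double lonB) {
        double dLat = latB - latA; // north-south leg
        double dLon = lonB - lonA; // east-west leg
        // atan2(east, north) measures the angle from north and resolves the quadrant
        double degrees = Math.toDegrees(Math.atan2(dLon, dLat));
        return (degrees + 360.0) % 360.0;
    }

    public static void main(String[] args) {
        // Destination slightly north-east of the device: roughly 45 degrees
        System.out.println(theoreticalAzimuth(52.0, 21.0, 52.001, 21.001));
    }
}
```

A destination due east of the device yields 90, and one due west yields 270.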

Sample Implementation of an Android Application:

We do not describe here how to obtain the location and azimuth of the device, because this is very well documented and many tutorials are available online. The relevant Android developer guides are Sensors Overview and Location Strategies.

Once the sensor data is ready, it's time to implement CameraViewActivity. The first and most important step is to implement SurfaceHolder.Callback, which lets us display the camera preview in our layout. SurfaceHolder.Callback declares the three methods responsible for this: surfaceChanged(), surfaceCreated(), and surfaceDestroyed().

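```java
import android.app.Activity;
import android.hardware.Camera;
import android.view.SurfaceHolder;

import java.io.IOException;

// Uses the legacy android.hardware.Camera API (deprecated since API level 21).
// Register this callback with surfaceView.getHolder().addCallback(this) in onCreate() (not shown).
public class CameraViewActivity extends Activity implements SurfaceHolder.Callback {

    private Camera mCamera;
    private boolean isCameraviewOn = false;

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        // Open the default (back-facing) camera and rotate the preview for portrait layouts
        mCamera = Camera.open();
        mCamera.setDisplayOrientation(90);
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        // Stop any running preview before re-binding it to the changed surface
        if (isCameraviewOn) {
            mCamera.stopPreview();
            isCameraviewOn = false;
        }
        if (mCamera != null) {
            try {
                mCamera.setPreviewDisplay(holder);
                mCamera.startPreview();
                isCameraviewOn = true;
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        // Release the camera so that other applications can use it
        if (mCamera != null) {
            mCamera.stopPreview();
            mCamera.release();
            mCamera = null;
        }
        isCameraviewOn = false;
    }
}
```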

Next, we need to connect to the sensor data providers. In this case, that just means implementing and initializing the proper listeners from OnLocationChangedListener and OnAzimuthChangedListener. The theoretical azimuth is calculated from the current location.

Based on the orientation of the phone, we then compute an accuracy window and compare the current angle with the theoretical azimuth. When the difference falls within that window, the augmented object is drawn over the camera view. With this approach, an augmented object can be placed at any azimuth around the user's location. This makes a simple augmented reality application much easier to build than with the usual, more complex approaches.

The major limitation to consider when implementing it this way is sensor accuracy. It is unfortunately not perfect, mainly because of magnetic interference, including the magnetic field generated by the device itself.
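The comparison step might look like the following sketch. It is not the original article's code. The names `AzimuthMatcher`, `signedOffset`, `isOnTarget` and `screenX` are hypothetical, and so are the accuracy window and the linear mapping from angle to screen position.

Two details matter in practice:

- The difference must wrap around north: a heading of 350° and a target at 10° are only 20° apart.
- The signed offset tells the UI whether to draw the overlay left or right of the screen center.

```java
import java.util.function.DoubleConsumer;

/** Hypothetical helper comparing the device heading with the theoretical azimuth. */
public final class AzimuthMatcher {

    /** Signed offset of the target from the heading, in [-180, 180); positive means clockwise (to the right). */
    public static double signedOffset(double deviceAzimuth, double targetAzimuth) {
        return ((targetAzimuth - deviceAzimuth) % 360.0 + 540.0) % 360.0 - 180.0;
    }

    /**
     * Calls onTarget with the signed offset and returns true when the target lies
     * within +/- accuracy degrees of the device heading; otherwise returns false.
     */
    public static boolean isOnTarget(double deviceAzimuth, double targetAzimuth,
                                     double accuracy, DoubleConsumer onTarget) {
        double offset = signedOffset(deviceAzimuth, targetAzimuth);
        if (Math.abs(offset) <= accuracy) {
            onTarget.accept(offset);
            return true;
        }
        return false;
    }

    /**
     * Linear approximation of the overlay's horizontal pixel position, assuming the
     * camera preview spans horizontalFov degrees across screenWidth pixels.
     */
    public static double screenX(double offset, double horizontalFov, int screenWidth) {
        return screenWidth / 2.0 + offset / horizontalFov * screenWidth;
    }

    public static void main(String[] args) {
        // Heading 350 degrees, target at 10 degrees, accuracy window of +/- 25 degrees
        isOnTarget(350.0, 10.0, 25.0,
                offset -> System.out.println("Target in view, offset " + offset));
        // Prints: Target in view, offset 20.0
    }
}
```

In an Android app, the callback would be the place to move or show the overlay view, for example at the x-coordinate returned by `screenX`.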

Latest Achievements

- In January 2015, Meta raised $23 million in a funding round led by Horizons Ventures, Tim Draper, Alexis Ohanian, BOE Optoelectronics and Garry Tan. On February 17, 2016, Meta announced its second-generation product, the Meta 2, at TED. The Meta 2 head-mounted display uses a sensor array for hand interactions and positional tracking. It has a 90-degree diagonal field of view and a display resolution of 2560 x 1440 (20 pixels per degree). At the time, this was considered the largest field of view (FOV) available.

- Ingress is an augmented-reality, location-based massively multiplayer online game (MMOG) developed by Niantic, originally part of Google. It was first released exclusively for Android devices on November 15, 2012, and was made available for Apple's iOS on July 14, 2014. The game has a science-fiction back story with a continuous open narrative. Ingress has also been considered a location-based exergame.

- VIPAAR on Google Glass brings augmented reality to the medical profession. A virtual display appears before the surgeon, over the waiting patient, to guide each surgical move. Meanwhile, the patient's vital signs are monitored through a wireless connection, so that the surgeon is kept informed of every key piece of the complicated puzzle that is a surgical procedure.

- On August 25, 2014, Ikea's Catalogue app was launched. In case you aren't familiar with the Swedish furniture titan, Ikea has 298 stores in 26 countries and earned 27.6 billion euros in revenue last year (1.3 billion of that from Ikea Food alone). It is easily regarded as one of the top three furniture retailers worldwide. Through its Augmented Reality app, Ikea lets customers shop from the comfort of their own home by placing virtual furniture in their real living room. All the user has to do is place an Ikea catalogue on the floor wherever they want the furniture to appear, pick a piece from the app's list of over 90 products, and watch it appear on the screen.

Concluding Lines

We are also seeing a shift from AR as a layer on top of reality to a more immersive, contextual experience that combines wearable computing, machine learning, and the Internet of Things (IoT). We are moving beyond holding up our smartphones to see three-dimensional animations such as dinosaurs, toward assistive technology that helps blind people perceive and navigate their surroundings. AR can be life-changing, and there is great potential to design experiences that go beyond gimmickry and have a positive effect on humanity.

This new wave of AR, combining IoT, big data, and wearable computing, also offers an opportunity to connect people and create meaningful experiences, whether across distances or face to face. The future of these experiences is ours to imagine and build, and reality will be augmented in never-before-seen ways. What do you want it to look like, and what role will you play in defining it?
